September 30, 2015

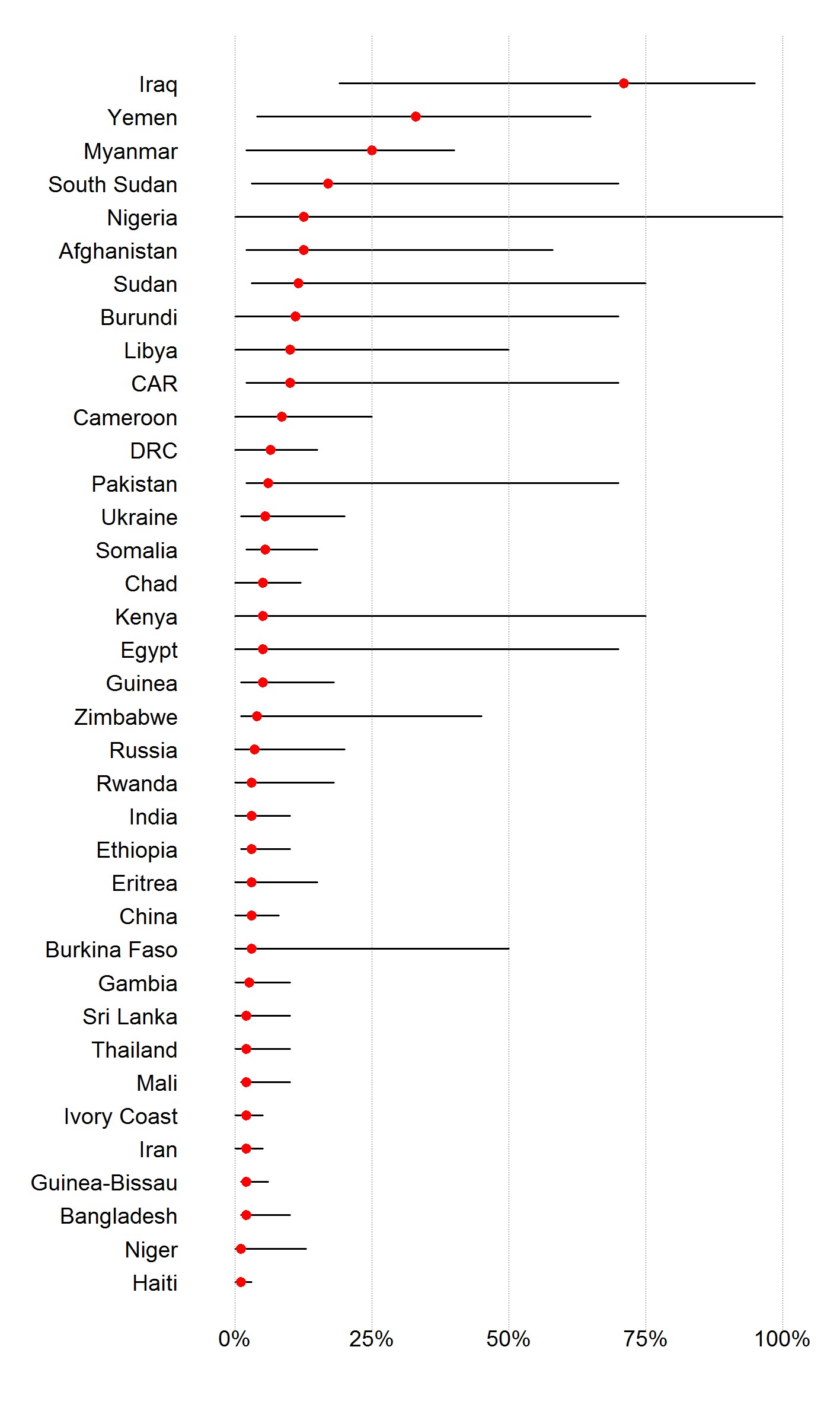

The chart above summarizes our Expert Opinion Pool’s current assessment of the risk of new mass-killing episodes in the countries we have asked participants about.

In contrast to our statistical risk assessments, which focus exclusively on state-led mass killing, these assessments cover mass killings by any domestic perpetrator, including state security forces, rebel groups, and other non-state militias. In the chart, the red dots mark the collective forecast as of September 27, 2015. The black bars show the range of all individual forecasts made on each question since its launch, providing some indication of the pool's collective uncertainty about each case.

The opinion pool runs continuously, but we last blogged a summary comparison of forecasts on these questions in early July. In the months since, most of these forecasts have changed little or not at all, and most of the changes that have occurred have been declines in risk. For example, Cameroon’s estimated risk fell from approximately 20 to 10 percent, and Ukraine’s saw a similar drop.

Those declines do not necessarily mean that our pool sees shrinking long-term risks of mass killing in those countries, however. In many cases, they are more likely an artifact of the approaching end of the period the questions cover.

To let participants assign a probability to these events, and to let us assess the accuracy of the resulting forecasts, each question has to cover a discrete time period: here, calendar year 2015. As the end of that period approaches, participants should generally revise their forecasts downward, because even if structural risk remains comparatively high, fewer days remain in which the relevant events could occur. So, if our opinion-pool participants understand the rules of probability and are updating their forecasts, we would expect a generic decline in assessed risks as the end of the year draws nearer.
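To make that arithmetic concrete, here is a minimal Python sketch under a deliberately simple, hypothetical assumption: that the underlying risk is spread evenly across every remaining day of the year (a constant daily hazard). The function name `shrink_forecast` and the day counts are illustrative only; they are not part of how the opinion pool actually collects, aggregates, or scores forecasts.

```python
def shrink_forecast(prob_now: float, days_left_now: int, days_left_later: int) -> float:
    """Rescale a forecast to a shorter remaining window.

    Hypothetical simplifying assumption: a constant daily hazard, so the
    chance of *no* event, (1 - prob_now), is spread evenly over the
    days_left_now remaining days. Structural risk is held fixed; only the
    number of days left in the question's window changes.
    """
    if not 0.0 <= prob_now <= 1.0:
        raise ValueError("prob_now must be between 0 and 1")
    if days_left_now <= 0 or not 0 <= days_left_later <= days_left_now:
        raise ValueError("need days_left_now > 0 and 0 <= days_left_later <= days_left_now")
    # Per-day probability of no event implied by the current forecast.
    daily_survival = (1.0 - prob_now) ** (1.0 / days_left_now)
    # Probability of at least one event in the shorter remaining window.
    return 1.0 - daily_survival ** days_left_later


# Hypothetical example: a 20 percent forecast made on July 1 (184 days left in
# the year), re-evaluated on September 27 (95 days left), risk unchanged.
print(f"{shrink_forecast(0.20, 184, 95):.3f}")  # prints 0.109
```

Under this assumption, a 20 percent forecast in early July falls to about 11 percent by late September with no change at all in structural risk, a decline similar in size to the one described for Cameroon. The sketch does not show what actually drove any individual forecast, and real risk is rarely spread evenly over time.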

In an ideal world, we would ask our opinion pool questions that had no crisp end date but could still be scored and assessed. Scoring matters because it is how the experts get the feedback that helps them become better forecasters over time, and thus improves the accuracy of the opinion pool’s output. Unfortunately, this is a hard technical problem that hasn’t been fully solved yet. We continue to watch other expert-elicitation systems for ideas about alternative approaches, but we haven’t yet seen one that we believe is ready to adopt.
